A Framework for R&D

Impactful innovation is what Research & Development (R&D) teams around the world are working hard towards. Yet, there don’t seem to be blueprints that are reused and trusted across companies. On one hand, we get very little visibility into this process and on the other, it seems very challenging to define and measure success.

I love challenges, the harder the better. And the challenge of doing R&D while also building a sustainable, growing business is something I’ve been thinking about for a while. So here are my thoughts and some of the things I’m experimenting with at Splice. It will take some time for me to confirm, self-correct and codify a solid, empirical approach, but I like the idea of documenting my findings and experiments as a way to keep myself honest. I will start by mentioning some of the main challenges that R&D teams must surmount and will then share my own framework.

R&D is just a label for accelerated and impactful innovation

The first thing I’d like to clarify is that R&D is just a label. It’s not a goal, it’s not even a strategy; it’s a label used to refer to things moved out of the normal process with specific expectations. The objective is clear though: companies invest in “R&D” because they usually want accelerated and impactful innovation. The short version of the rationale is that it’s really hard to focus on delivering on short term objectives (OKRs/KPIs) while also exploring risky hypotheses. So there comes a time when companies decide to have teams focused solely on “innovation”, hoping that by isolating them from the day-to-day concerns, they can find breakthroughs that will empower the business and make a difference.

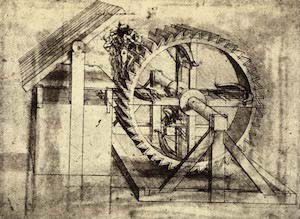

Leonardo da Vinci, Crossbow Machine

Before going further, I’d like to define a few useful terms used in the rest of this document:

- experiments: a measurable procedure undertaken to make a discovery, test a hypothesis, or demonstrate a known fact without being sure of the eventual outcome. Concretely, that usually means building and running some sort of prototype and measuring its impact.

- learnings: answers to questions we might have or discovered as we went. Learnings reduce the unknowns around a specific topic.

- local vs. global innovations: by local innovations, I refer to innovations being made by the teams working on the current roadmap. Global, here, means outside the scope of the current roadmap: innovations that might span teams or fall outside the roadmap altogether.

- local exploration: refers to the discovery phase done by teams already focusing on a particular domain area.

After discussions with many industry R&D leaders, and from driving this effort myself at Splice, I identified three significant challenges:

Primary challenge: making decisions

This seems like an obvious challenge, yet it took me a while to grasp how critical it is to understand and plan for it. Being in charge of bringing accelerated and impactful innovation sounds exciting, but where do we start, why, and how do we communicate that? Are we too focused on “local innovations” vs. big picture innovations? Making any kind of decision, technical or not, is much harder in a context without clear directions, guardrails and metrics, especially if we don’t have a way to evaluate success or failure.

Secondary challenge: what does success look like?

This is the biggest question I faced and one that I felt most compelled to answer before committing resources to R&D at Splice. Because innovation isn’t always tangible and because people within our organizations have different expectations, I wanted a clear framework of evaluation. Selfishly, I wanted to know if I was doing a good job or not, and I wanted to be able to have this discussion with others using a baseline we all agreed on. There is another aspect to this challenge: if we do find an adequate approach to R&D, we can create a framework by which to make decisions. Not all decisions and high fives in our framework would necessarily lead to “breakthroughs” or features shipped to prod. On the contrary, success also lies in the ability to decide what shouldn’t be shipped or, better yet, not worked on at all. Success is about the decisions we manage to make, and in that sense, everything we prove we shouldn’t do must also be celebrated as a huge win!

Third challenge: communication

There is so much stigma around R&D that communication is a huge part of what R&D teams should excel at. It might sound counterintuitive to many, especially if you imagine an R&D team as a bunch of crazy scientists running experiments in a basement lab. It turns out most R&D teams work very much in isolation and rarely interface with Product or Engineering. When they do, the relationship doesn’t have deep roots, and it is often perceived as a distraction. We end up with 2 common scenarios:

- The R&D team feels that they aren’t shipping anything and aren’t valued.

- The Product & Engineering teams feel that they are forced to change their roadmap to ship (sometimes subpar) experiments that they have to maintain.

These 2 scenarios can be avoided if we all work on the same objectives, reduce our ego to a minimum and provide value to each other.

R&D is removed from the day-to-day so that the team can focus on out of band hypotheses. But they also want to see their work being shipped and be impactful. To accomplish that, finding amazing actionable learnings isn’t enough. Work needs to be done to have everyone in sync, expose valuable findings to other teams and build the relationships to make sure these findings make it to the roadmap. You can’t be impactful if you are totally isolated. Building those bridges is absolutely critical so you can become a champion and find other champions within the organization.

Experiment: developing an R&D framework

Here is the framework I put together for Splice and something I am still tweaking. It’s far from perfect but it tries to address some of the challenges I highlighted earlier.

Process: Have stakeholders define one or more key hypotheses. For each hypothesis agree on desired learnings, budget and timeline.

Output: Regular communication, actionable (and documented) learnings, product briefs that summarize the research and make suggestions and finally prototypes/demos that helped us come to our conclusions and help others “feel” the potential.

Success evaluation: stakeholders evaluate the extracted learnings and how they impact the roadmap.

Stakeholders

R&D is a service to the company and having a “Braintrust” to help steer, bring a different perspective and champion your work is a huge advantage. This is similar to Pixar’s approach to creative guidance. R&D doesn’t technically report to the stakeholders; they are a support mechanism. Those experienced experts work on their own challenges but come together to discuss how innovation can help the company and to discuss hypotheses that aren’t explored but could be extremely impactful. Finally, they help refine the direction to maximize the impact of the work on the roadmap. Those stakeholders have to be influential people who can see the big picture and can express candid feedback. I chose 3 stakeholders; depending on how your company works, you might pick other stakeholders:

- Product Partner: Looks past our current roadmap and finds areas that if explored would help us make better decisions.

- Business Partner: Looks at the current & future economical situation, helping define business opportunity areas to explore.

- Transfer Partner: Makes sure learnings are properly transferred. This stakeholder is also the best judge of what should be explored locally vs by a dedicated future-looking team.

Main Hypothesis

Together we define a big hypothesis we want to explore. The hypothesis will be our North Star for a few months. From there, we define desired learnings to focus on. Those desired learnings will be used to extract initiatives but also to evaluate our work.

Let’s say our business provides services to the elderly. We’ve been focusing on a watch type device to monitor their health and falls. Our product is backed by a subscription service. We have a solid roadmap to execute on this vision, but we also believe that innovation could have a huge impact.

Hypothesis: “We believe that leveraging home automation integration would bring children and parents closer, thereby improving the mental health of both groups.”

Context: The Business partner is very interested in a potential new revenue line, the Product partner is thinking about next year’s roadmap and the feasibility of such an approach. Everybody is thinking about differentiating themselves from the competition as well as the potential ROI. Everybody is excited about the project but there are way too many unknowns to add this project to the roadmap.

Desired learnings

- Who are the main home automation providers, what does it take to integrate with them?

- What kind of experiences can we provide to our users?

- What’s the technical investment required to build/ship a first experience on the biggest platform? How about integrating with other platforms?

- How do we measure usage, traction and the relationship between our 2 groups?

- What are ways to monetize such a service if we were to ship it?

Those desired learnings come from discussions with the R&D stakeholders. They need to be able to answer the obvious questions the teams would have if the hypothesis is confirmed and the stakeholders decide to add a related project to the roadmap. The goal isn’t to have something ready to ship to users, but to have gathered enough facts so that the company has enough visibility to make the right planning decisions. The decision might be as simple as whether or not to pursue the opportunity. Both outcomes are great since they are decisions, and decisions are what help companies move forward. Also, proving that we should not do something will save a huge amount of time and money, as well as solidify the direction the company is taking.

Budget and timeline

We defined a North Star and we have specific questions we’d like to answer, but we also need to timebox our research. This is a very important phase because we are setting the expectations for the team and for the rest of the company. We aren’t building a product we are shipping to all our users; we are building confidence in whatever direction the company will take. This is an interesting exercise which helps the stakeholders and the R&D team define how much we want to invest in the hypothesis and each desired learning. Concretely, that avoids treating R&D as a black box with results coming at unexpected times. It also gives the stakeholders an opportunity to change focus at the end of a budgeted period. I hear Google X works a bit like that: each year, each project lead presents their team’s progress and asks for a renewal or increase of budget. This is somewhat similar, except at a smaller scale. We need to evaluate more than one hypothesis per year and need to influence the current business instead of creating brand new ones.
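One way to keep the agreement from staying fuzzy is to write it down as data. The following is a minimal, hypothetical sketch and not a tool used at Splice: the class names, the week-based budgets, the start date and the shortened wording of the questions are all assumptions made for illustration. It ties a hypothesis to its desired learnings, gives each learning a timebox, and derives the date at which stakeholders would review the work.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class DesiredLearning:
    question: str
    budget_weeks: int             # hypothetical timebox agreed with stakeholders
    answer: Optional[str] = None  # filled in once the learning is documented


@dataclass
class Hypothesis:
    statement: str
    start: date
    learnings: List[DesiredLearning] = field(default_factory=list)

    @property
    def budget_weeks(self) -> int:
        # Total investment is the sum of the per-learning timeboxes.
        return sum(learning.budget_weeks for learning in self.learnings)

    @property
    def review_date(self) -> date:
        # End of the budgeted period, when stakeholders can renew or change focus.
        return self.start + timedelta(weeks=self.budget_weeks)

    def open_questions(self) -> List[str]:
        # Desired learnings that have no documented answer yet.
        return [l.question for l in self.learnings if l.answer is None]

    def due_for_review(self, today: date) -> bool:
        return today >= self.review_date


# Illustrative values only: the dates and budgets are made up.
hypothesis = Hypothesis(
    statement="Home automation integration would bring children and parents closer.",
    start=date(2024, 1, 8),
    learnings=[
        DesiredLearning("Who are the main home automation providers?", budget_weeks=2),
        DesiredLearning("What kind of experiences can we provide?", budget_weeks=4),
        DesiredLearning("What does a first experience cost to build?", budget_weeks=6),
    ],
)
```

With something like this in place, a stakeholder review could start from `hypothesis.open_questions()` and `hypothesis.due_for_review(date.today())` rather than from memory.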

Output

The goal is to have significant impact on the future of the company and we do that by influencing the roadmap. So our output has to support this goal. In most companies, the roadmap is managed by a Product organization. We therefore need to provide them with what they need to understand and decide if altering the roadmap makes sense. There are 3 main forms of output that seem to help:

- Product Briefs: use the Product language to summarize learnings. It’s a bit awkward at times, since we are answering a question and making a recommendation but don’t have a full integration plan; that would be something the Product team would do later on. However awkward it might feel to non-Product people, it is super important to speak a common language. I used a simple template that I’ll refine as I go. Here are some of the sections: the idea in one sentence, goals, counter-goals, opportunity, hypothesis, use cases, key open questions, suggestions. And finally a detailed breakdown of the experiment, with links to the research, code and demos.

- Prototypes/demos: whenever possible, give people an opportunity to experience for themselves what we could build together.

- Documentation, lots of documentation and examples: there are two reasons for that. First, the product briefs don’t go into all the details of what we learned and the tangential discoveries. Second, if the learnings turn into a solution that will make it to the roadmap, you want to provide all teams (eng, design etc…) with as much information as possible so they don’t waste time and feel confident they can work within a certain timeline.

Conclusion

If you are looking into building an R&D team or are considering working with one, consider defining a process around it. Have clear discussions about expectations and accountability. While leaders often love investing in “Innovation”, the meaning and expected output are often too unclear to set an R&D team up for success. Instead of expecting such a framework to be delivered to you, consider offering the framework you think would help the company and the R&D team stay aligned. It won’t be perfect and will need to be tweaked, so don’t overthink it: R&D the “R&D process”. Learn, evaluate and adapt. The company and the team will feel, and be motivated by, the impact.